Note: Committee members who contributed to the coding and writing of this report are (in approximate order of contribution): Marcus Crede, Iowa State University; Patrick Dunlop, Curtin University; Deborah Powell, University of Guelph; Yicheng Xue, City University of New York; Samantha Weissrock, Weissrock Consulting; and Mable Clark, Central Michigan University.

Many industrial and organizational psychologists admit to engaging in questionable and undisclosed research practices, such as p-hacking and HARKing, that increase the likelihood that published results are misleading or false (e.g., John et al., 2012). Indeed, some estimates of the replicability of specific types of findings reported in industrial and organizational psychology journals, such as moderation and interaction effects, are very low (e.g., Crede & Sotola, 2024). Concerns about the replicability and reproducibility of research findings are, of course, not unique to our field (e.g., Open Science Collaboration, 2015), and they have prompted calls for researchers to adopt open science practices (e.g., Nosek et al., 2015). Open science practices comprise a variety of methods designed to increase the transparency and replicability of research, including (but not limited to) (a) the preregistration of hypotheses, (b) the preregistration of data analytic approaches (e.g., inclusion criteria, use of control variables, treatment of missing data and outliers), (c) making data publicly available to interested readers, and (d) making research materials publicly available. Such practices have become increasingly prevalent in related disciplines such as social psychology, personality psychology, cognitive psychology, and economics. One recent survey of prominent social scientists (Ferguson et al., 2023) suggests that open science practices are relatively widespread and that attitudes toward them are highly favorable. Specifically, Ferguson et al. reported that approximately half of all surveyed psychology researchers had made data or code publicly available, and a similar proportion reported having preregistered their hypotheses. Other studies have reported a lower prevalence of these practices. For example, in a cross-sectional study of empirical psychology articles published in 2022, Hardwicke et al. (2022) found that only 7% of articles reported preregistration of hypotheses and only 14% made data publicly available. Of course, adoption of these practices may vary substantially across research domains. In false memory research, for instance, Wiechert et al. (2024) reported that 75% of articles made data available, whereas preregistration was evident in 25% of articles in 2023.

To assess the degree to which industrial and organizational psychology researchers have adopted open science practices, the 2024–2025 Open Science and Practices Committee of the Society for Industrial and Organizational Psychology (SIOP) reviewed all articles published in 2024 in five widely read journals that regularly publish research relevant to industrial and organizational psychology: (a) Journal of Applied Psychology, (b) Journal of Management, (c) Academy of Management Journal, (d) Personnel Psychology, and (e) Journal of Organizational Behavior. This report summarizes our findings.

Method

Our full methodology was preregistered at https://osf.io/93ma8/?view_only=ee105cb82f604372941396b816c6dc12; we summarize it briefly here and note departures from the preregistration below. Volunteer members of the Open Science and Practices Committee coded all empirical articles published in the five journals that met the inclusion criteria.

Inclusion and Exclusion Criteria

Articles were included if they reported statistical analyses of data. We excluded articles reporting purely qualitative data, purely theoretical or conceptual papers, commentaries, and editorials.

Coding Categories

All articles were coded for the presence or absence of three open science practices: (a) the preregistration of hypotheses, (b) the preregistration of data analytic approaches, and (c) the direct availability of data (data described as “available upon request” were not coded as directly available). We also coded whether the authors attempted to replicate any of their primary findings in a separate sample and whether they reported conducting an a priori power analysis to determine the sample size needed to achieve acceptable statistical power.

Departures From Preregistration

Two primary departures from our preregistration are noteworthy. First, because a reasonable case can be made that preregistering hypotheses and data analytic approaches is less relevant for meta-analytic reviews, we report our results both with and without meta-analytic reviews. Second, our preregistration stated that replication attempts and a priori power analyses would be treated as open science practices. On reflection, we concluded that these are not, strictly speaking, open science practices, even though they are desirable methodological characteristics for most studies. We therefore report them separately.
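The coding rules and the two ways of reporting results described above can be summarized computationally. The following Python is a minimal sketch under stated assumptions, not the committee's actual analysis code: the field names, the `DataAvailability` categories, and the functions `prevalence` and `prevalence_by_journal` are hypothetical. It encodes the rule that data “available upon request” do not count as directly available and computes the proportion of articles showing each practice, with meta-analytic reviews either included or excluded. No coded data are included here; the actual coding is available at the OSF link given in the Method section.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Iterable, List, Optional


class DataAvailability(Enum):
    """Hypothetical categories for how an article describes access to its data."""
    NONE = "none"
    UPON_REQUEST = "upon_request"
    DIRECT = "direct"


@dataclass(frozen=True)
class CodedArticle:
    """One coded empirical article; all field names are illustrative assumptions."""
    journal: str
    is_meta_analysis: bool
    prereg_hypotheses: bool
    prereg_analyses: bool
    data_availability: DataAvailability

    @property
    def open_data(self) -> bool:
        # Data "available upon request" are NOT coded as directly available.
        return self.data_availability is DataAvailability.DIRECT


# The three coded open science practices (attribute names on CodedArticle).
PRACTICES = ("prereg_hypotheses", "prereg_analyses", "open_data")


def prevalence(
    articles: Iterable[CodedArticle], include_meta_analyses: bool = True
) -> Dict[str, Optional[float]]:
    """Proportion of articles showing each practice.

    Meta-analytic reviews are dropped when include_meta_analyses is False.
    Returns None for every practice if no articles remain after filtering.
    """
    pool: List[CodedArticle] = [
        a for a in articles if include_meta_analyses or not a.is_meta_analysis
    ]
    if not pool:
        return {p: None for p in PRACTICES}
    # Booleans sum as 0/1, so the sum counts articles showing the practice.
    return {p: sum(getattr(a, p) for a in pool) / len(pool) for p in PRACTICES}


def prevalence_by_journal(
    articles: Iterable[CodedArticle], include_meta_analyses: bool = True
) -> Dict[str, Dict[str, Optional[float]]]:
    """Compute prevalence separately within each journal."""
    articles = list(articles)
    journals = sorted({a.journal for a in articles})
    return {
        j: prevalence([a for a in articles if a.journal == j], include_meta_analyses)
        for j in journals
    }
```

Calling `prevalence(articles)` and `prevalence(articles, include_meta_analyses=False)` on the same coded records yields the two sets of proportions, so the effect of excluding meta-analytic reviews can be compared directly. Replication attempts and a priori power analyses could be added as separate boolean fields and summarized the same way, kept apart from `PRACTICES` in line with the second departure noted above.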

Results

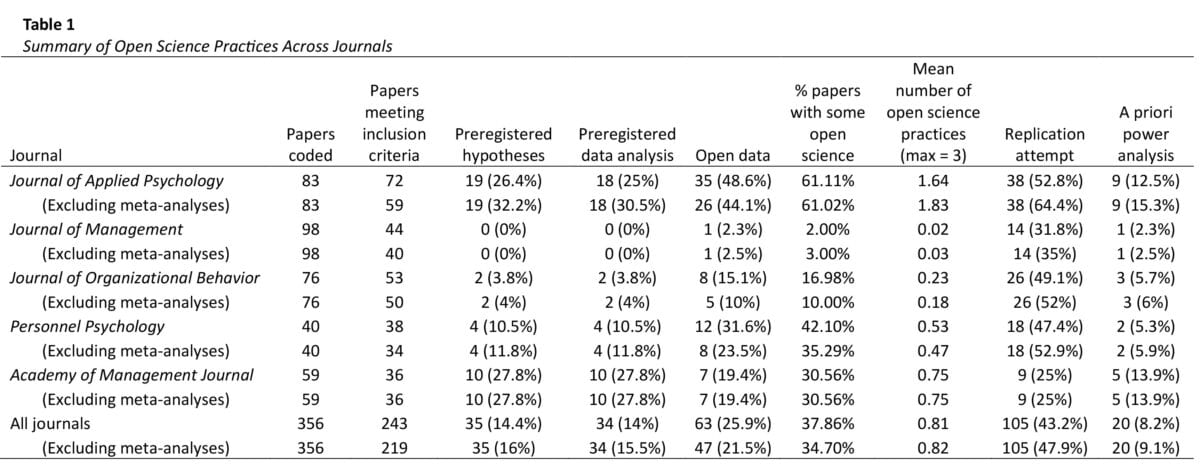

All coding is available at the OSF link provided earlier. Our results are summarized in Table 1. Across all journals, open science practices were rare: only about one in seven empirical articles preregistered hypotheses or data analytic approaches, and data were openly available for only about one in four. Our coding also revealed substantial differences across journals. The Journal of Applied Psychology and the Academy of Management Journal had by far the highest proportions of articles with preregistered hypotheses or data analytic approaches, although these proportions were still very low. Open science practices were almost entirely absent from articles published in the Journal of Management and were also very rare in articles published in the Journal of Organizational Behavior. Personnel Psychology had relatively few articles with preregistered hypotheses or data analytic approaches, but data were publicly available for almost a third of its articles.

Replication attempts were relatively common: close to half of all empirical articles attempted to replicate some aspect of their findings in additional samples. A priori power analyses were reported very infrequently in all five journals; fewer than 10% of articles reported any a priori power analysis.

Discussion

Open science practices do not guarantee the quality of research, but they can be viewed as one way of helping consumers of research judge the likely replicability and reproducibility of reported findings and of assuaging concerns about p-hacking and HARKing. Psychologists have championed open science practices for over a decade (e.g., Nosek et al., 2015), yet our findings show that relatively few researchers in industrial and organizational psychology have adopted them, which we find disheartening. Most open science practices are easy to implement and require little investment of time or resources. We hope that our findings encourage researchers, reviewers, journals, and institutions to adopt these practices and to promote their wider use.

References

Crede, M., & Sotola, L. K. (2024). All is well that replicates well: The replicability of reported moderation and interaction effects in leading organizational sciences journals. Journal of Applied Psychology, 109(10), 1659–1667. https://doi.org/10.1037/apl0001197

Ferguson, J., Littman, R., Christensen, G., Levy Paluck, E., Swanson, N., Wang, Z., Miguel, E., Birke, D., & Pezzuto, J. (2023). Survey of open science practices and attitudes in the social sciences. Nature Communications, 14, 5401. https://doi.org/10.1038/s41467-023-41111-1

Hardwicke, T. E., Thibault, R. T., Clarke, B., Moodie, N., Crüwell, S., Schiavone, S., Handcock, S. A., Nghiem, K. A., Mody, F., Eerola, T., & Vazire, S. (2022). Prevalence of transparent research practices in psychology: A cross-sectional study of empirical articles published in 2022. Advances in Methods and Practices in Psychological Science, 7(4). https://doi.org/10.1177/25152459241283477

John, L. K., Loewenstein, G., & Prelec, D. (2012). Measuring the prevalence of questionable research practices with incentives for truth telling. Psychological Science, 23(5), 524–532. https://doi.org/10.1177/0956797611430953

Nosek, B. A., Alter, G., Banks, G. C., Borsboom, D., Bowman, S. D., Breckler, S. J., Buck, S., Chambers, C. D., Chin, G., Christensen, G., Contestabile, M., Dafoe, A., Eich, E., Freese, J., Glennerster, R., Goroff, D., Green, D. P., Hesse, B., Humphreys, M., . . . Yarkoni, T. (2015). Promoting an open research culture. Science, 348(6242), 1422–1425. https://doi.org/10.1126/science.aab2374

Open Science Collaboration. (2015). Estimating the reproducibility of psychological science. Science, 349(6251), Article aac4716. https://doi.org/10.1126/science.aac4716

Wiechert, S., Leistra, P., Ben-Shakhar, G., Pertzov, Y., & Verschuere, B. (2024). Open science practices in the false memory literature. Memory, 32(8), 1115–1127. https://doi.org/10.1080/09658211.2024.2387108

Volume: 63

Number: 2

Author: The 2024–2025 SIOP Open Science and Practices Committee

Topic: Publications